Let me start by saying that I moved away from Ubuntu-based distros a couple years ago. They're an absolute trash company. I used Linux Mint Debian Edition for quite a while, until I acquired a Dell Precision Tower 3620 that had some driver issues. Around that same time I started playing with KDE Plasma 6 and quite loved it. I briefly installed KDE Neon, which was great, but the non-KDE packages were absurdly out of date. I ended up installing Fedora 40 KDE Spin and loved it. It was ridiculously up-to-date. It worked smoothly.

I've been wanting to start playing with some local AI, but just never got around to it. Then one day I opened up Firefox, and on the Fedora Start Page was a link to an article called "Running Generative AI Models Locally with Ollama and Open WebUI." I read down through it but, to be honest, I didn't have the energy for it. When I got down to the comments, though, there was one from 1stn00b that recommended trying Alpaca from Flathub to get up and running "in seconds."

Well, screw it. That's just too easy not to try out, right? So I headed to Discover and installed it ... which did take a bit longer than a few seconds. But, still, quick. I downloaded a small model for testing, and it worked! It was slow, but it worked. So I opted to try a larger model ... and the thing was slow. Damn slow. I mean, it makes sense, but this is what you do when you're experimenting. And it whetted my appetite. I was ready to head down the rabbit hole.

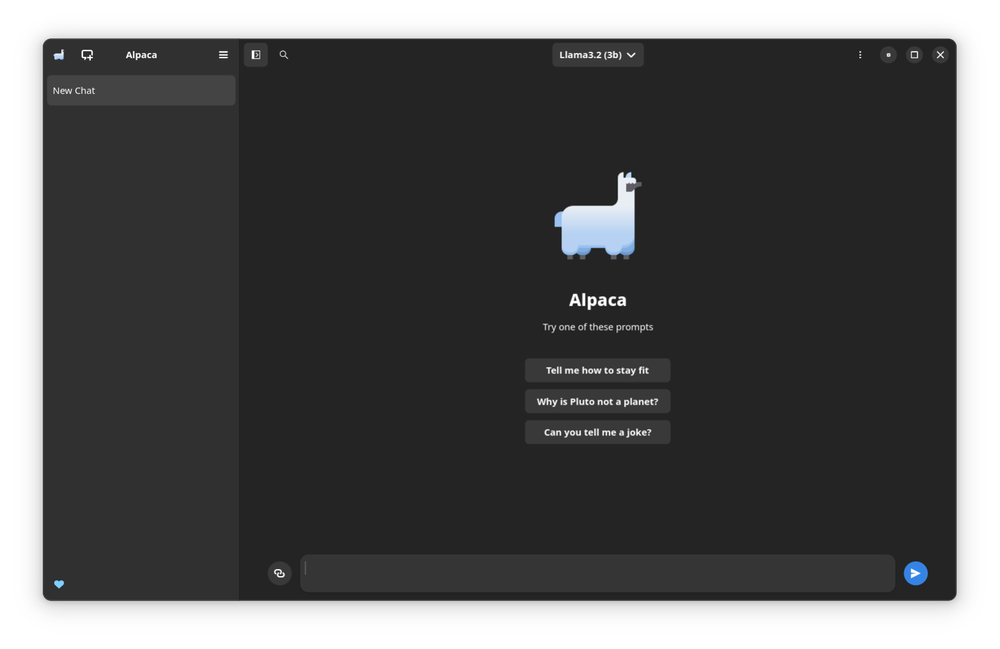

Alpaca window, with an Alpaca character in the middle as well as example prompts.

Alpaca has the ability to use a remote Ollama server, not just the included one, so I set up a new Debian container on my Incus homelab server, connected my desktop Ollama instance to it, and once again started downloading some models. To my surprise, it really wasn't any faster.
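If you want to confirm that a remote Ollama server is reachable before pointing Alpaca at it, something like the rough sketch below could help. It is not what I ran at the time. It assumes the server listens on Ollama's default port, 11434, and uses Ollama's `/api/tags` endpoint to list the models already pulled. The function names and the hostname `ollama.lan` are placeholders I made up for illustration. Keep in mind that Ollama normally listens only on localhost, so the server side would likely need `OLLAMA_HOST` set to accept connections from other machines.

```python
import json
import urllib.request

OLLAMA_PORT = 11434  # Ollama's default port; change it if your server differs


def tags_url(host: str, port: int = OLLAMA_PORT) -> str:
    """Build the URL of Ollama's model-listing endpoint for a given host."""
    return f"http://{host}:{port}/api/tags"


def model_names(payload: dict) -> list[str]:
    """Pull the model names out of a decoded /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]


def list_remote_models(host: str, timeout: float = 5.0) -> list[str]:
    """Ask a remote Ollama server which models it has available.

    Raises urllib.error.URLError if the server cannot be reached.
    """
    with urllib.request.urlopen(tags_url(host), timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `list_remote_models("ollama.lan")` (with your own container's hostname or IP in place of the placeholder) should return a list of model names if the server is up, or raise an error if it is not reachable.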

Well, the primary reason I wanted to even play with AI was for Nextcloud integration, which supports LocalAI, so I set up an additional Debian container for testing. I'm not surprised to say it wasn't any faster.

At this point the next logical step was to set up LocalAI on my desktop. The desktop does have an NVIDIA Quadro M4000, but I did not have the proprietary drivers installed to use the GPU. I ran the LocalAI installer and it attempted to install the proprietary drivers ... and failed. So I went to RPM Fusion and attempted to install the drivers manually. And I failed. One of my monitors completely stopped working. So I uninstalled the driver and got things working again. Then I tried another manual install ... and I lost display on both monitors. After a couple hours of trying to get back up and running, I just gave up.

Next I downloaded the Nobara KDE with nvidia live ISO and booted to it ... and it booted up with one monitor. The driver wasn't working.

So next I booted up a Pop!_OS alpha ISO with the NVIDIA driver, and it worked. It worked quite well. Well enough that I installed it to a secondary hard drive to toy with. I booted up and installed Alpaca and it worked, and using nvtop I could see it hitting the GPU for processing. So then I installed KDE Plasma, because while COSMIC is coming along nicely, I really, really love KDE, and as I spend so many hours a day at my computer working, I really didn't want to run an alpha release of anything. Sadly, since Pop!_OS is built on an Ubuntu LTS, the version of Plasma it runs was just really outdated.

Finally, I booted up the Kubuntu 24.10 ISO. The first thing I did was head to the driver manager. It showed the proprietary nvidia driver, so I opted to install it on my secondary hard drive. After rebooting and installing the driver and Alpaca and testing, I decided that this is the way I'd go for now.

So I did a final install of Kubuntu on my primary hard drive. I installed the proprietary drivers. I installed Alpaca. I spent more time than I wish I had learning how to add my /home to /etc/crypttab. I added the secondary drive as well so I could drop models on it.

I won't be staying with this install forever, but it's working for me right now. I'll eventually either tire of playing with AI or enjoy it enough that I'll invest in a better video card. And if that happens and I have one that sets up on Fedora without issue, I'll probably go back.

And as far as playing with AI ... I haven't found any more time. I burned a whole long weekend on this process. Let's hope it's worth it. Hell, it is. Because I enjoy learning more about Linux.

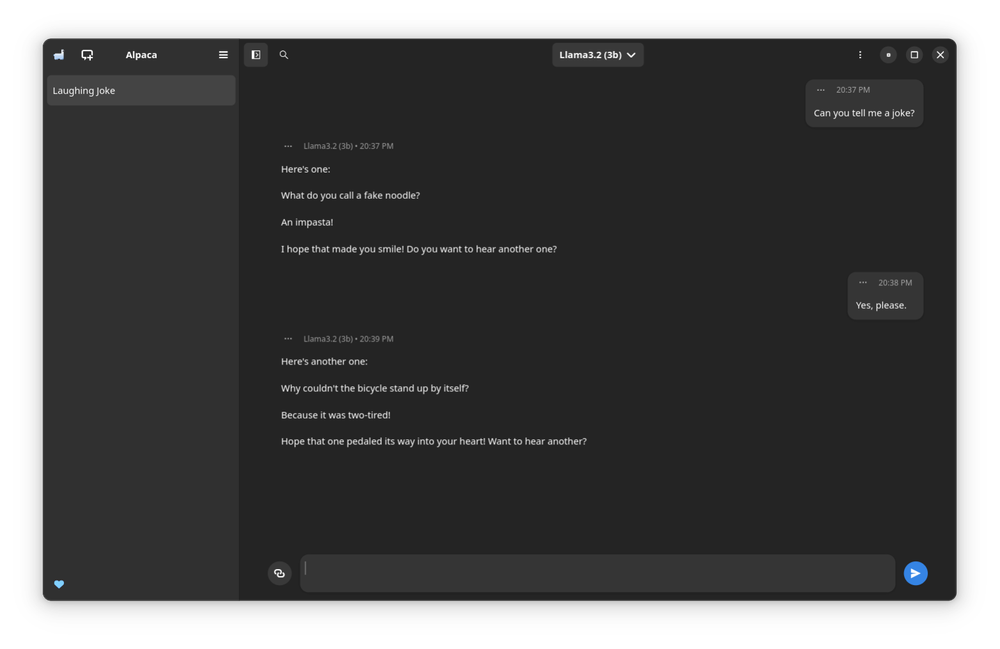

Alpaca window with jokes.
